When is an LLM the right tool for the job?

My wife and I recently bought our first home after twenty years of renting city apartments. The house was built in the 1960s and there are lots of DIY projects to be done. So, for the first time in my life, I now own a suite of power tools that includes a chainsaw, a jigsaw, a Sawzall, and a circular saw.

Over the last few weeks I've set up a workshop, watched a bunch of YouTube videos, and cut into various pieces of wood trying to figure out, “What problems is this tool actually good at solving?”

Similarly, my 2023 was a year of experimenting with Large Language Models. LLMs wield a lot of raw power, but we all seem to be collectively discovering when they're actually the right tool for the job, and when they're not.

For example, one of my first projects after getting the gpt hello world running in my terminal was making Minecraft-themed multiplication problems for my daughter. As it turned out, gpt-3.5 was comically inept at fourth-grade math!

Who knew?!

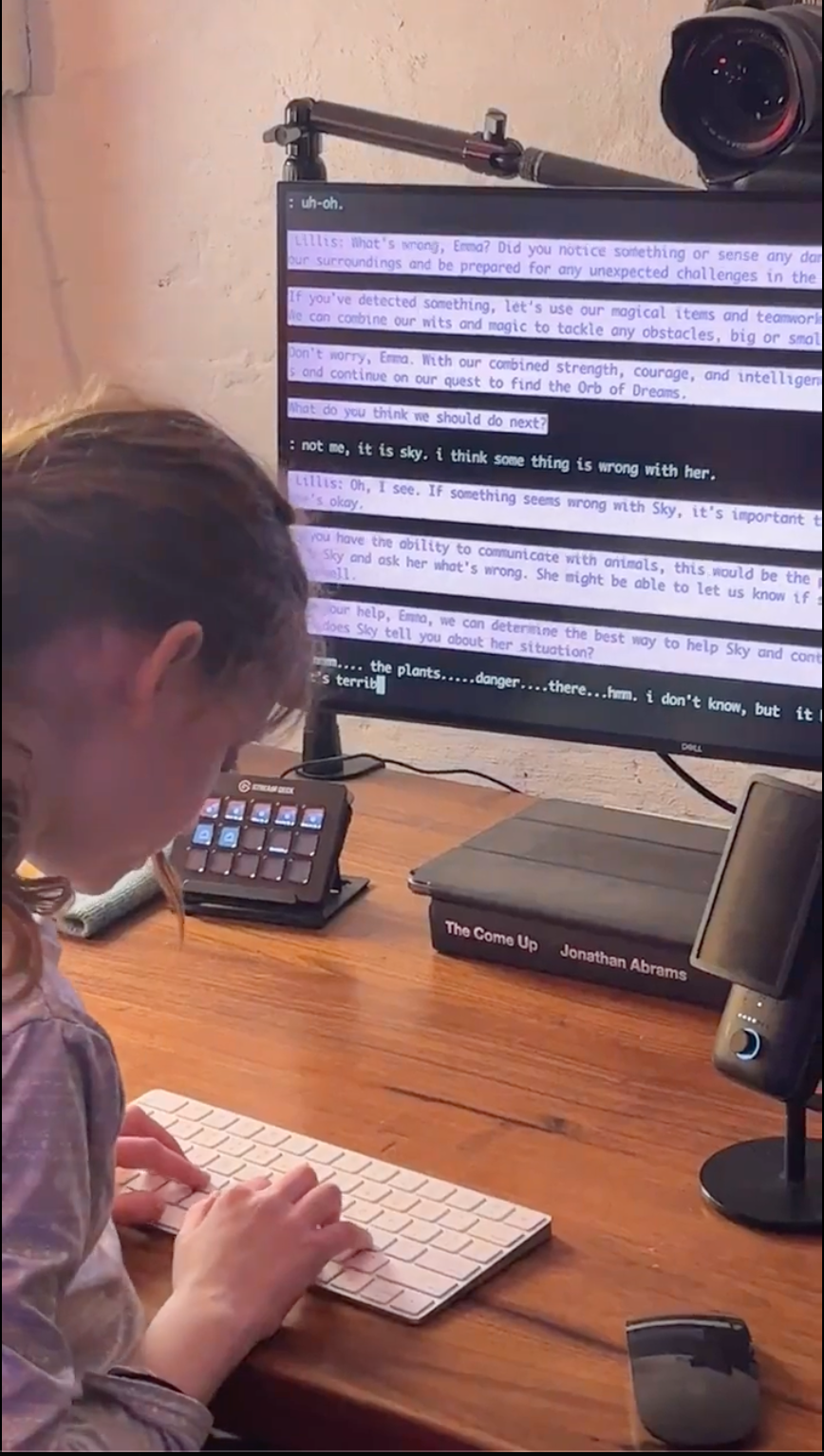

However, my daughter is an aspiring author, and when we gave gpt-3.5 an overview of the story she's writing, it turned her work into interactive fiction that was far more captivating than all my other attempts to get her to type into a terminal.

Based on those first few projects, I decided that the best way to learn the shape of problems well-suited for LLMs was to:

- build a bunch of different LLM-based apps

- get those apps in front of a bunch of different people

I built an email wrapper for GPT which lets me quickly ship LLM-based apps without spending cycles on UI. I call these apps HaiHais and have deployed a dozen+ over the last year. This month the platform sent and received over 2000 emails.

Here are three patterns I've learned about when an LLM might be the right tool for the job.

LLMs are useful for content personalization

The first app I shipped was HaiHai Adventures – it's the multiplayer version of that interactive fiction experience with my daughter. You can try it out by emailing an idea for a story to adventures@haihai.ai. If you want to play with your friends, just CC them.

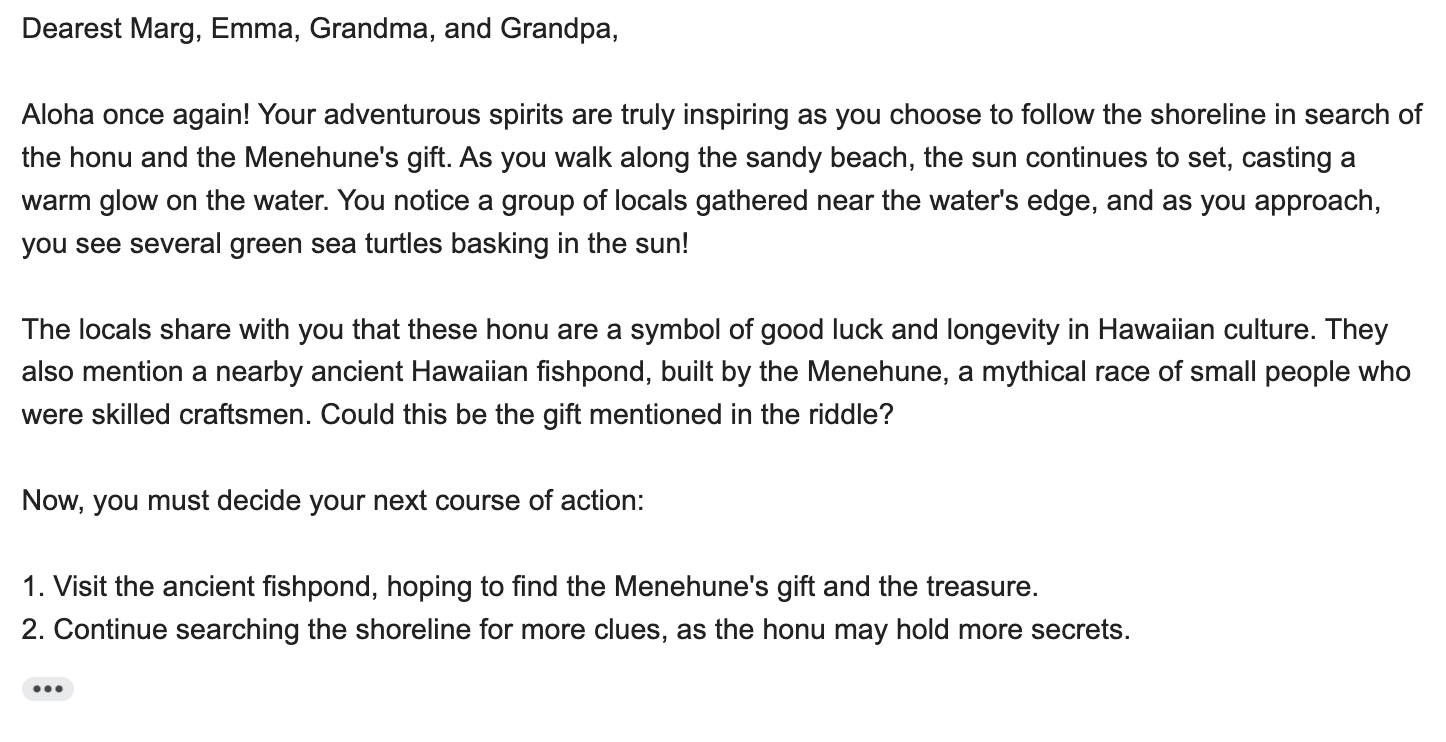

My parents were the first users of HaiHai Adventures who didn't live under our roof. My prompt was something like, "Take us on a fantastical adventure through the island of Kauai, from the airport in Lihue to our resort in Poipu. Weave in facts about the island's history and mythology."

I CCed my parents, went to bed... and woke up to 74 new emails.

From La-Z-Boy recliners in Arizona, my parents made their way through the waterfalls of Waimea, the cliffs of the Napali Coast, and the beaches of the South Shore.

You may ask, "was the story any good?"

Meh. Not really.

The stories from HaiHai Adventures are meandering, formulaic, and lack a beginning, middle, and end. (I've since come up with some strategies to improve this, with mixed results. Happy to go deeper in a future post if there's interest.)

That said – the stories are personalized: my parents are the protagonists, they're in control of what happens next, and the setting is their favorite place in the world.

There's a dive bar at the end of my block in Brooklyn. When people ask about it I say, "what it lacks in quality it makes up for with proximity."

What LLM-generated content may lack in quality, it makes up for with personalization.

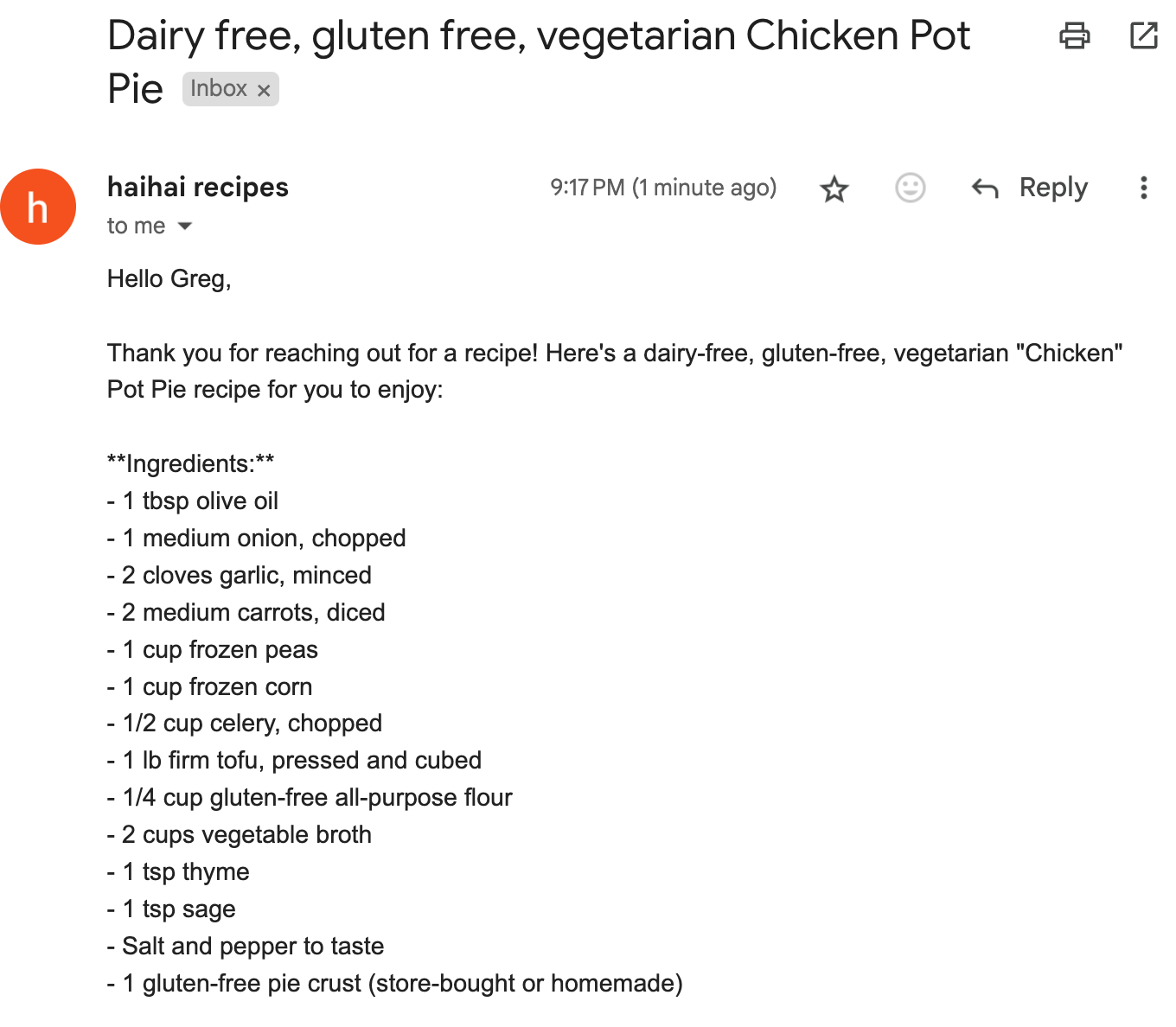

Here's another example: email recipes@haihai.ai and ask for a recipe for anything. Be as specific as you want.

Are you going to get back a recipe as good as what you'll find in the New York Times? No. (Well... unless it's literally from the NYT.) But if someone has a lot of dietary restrictions or just wants to cook from ingredients on hand...

We've seen this trend play out already in media consumption, before the current AI hype. Very few videos on YouTube or TikTok have the writing or production quality of a feature film or television series. But viewers will give up that quality for content tailored to their precise preferences. Generative AI will take this trend further: in entertainment, education, and beyond.

My friend Carl says, "In three years, delivering content that is not personalized for the recipient will seem lazy."

Content personalization is an LLM-shaped problem.

LLMs are useful when humans are doing robotic work

My friend Matt works for a non-profit that supports public school principals in Philadelphia. Principals in PA are required to write assessments of their teachers using the Danielson framework: a well-researched, well-intentioned, but super involved evaluation framework.

The requirements for teacher evaluations are informed by policies at the federal, state, and local levels. The Danielson framework has 22 criteria (thankfully, Philly principals only use 10 of the 22). Each criterion has multiple "elements" – in total there are up to 76 different elements that a principal might need to consider and document per teacher.

And you thought your performance review process was overwrought.

Matt and I built a HaiHai that takes in a principal's observation notes (or a transcript of a recording of the classroom), has gpt-4 iterate through the notes to identify elements for each criterion, and turns that evidence into a draft evaluation fitting the Danielson template.
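To make the "iterate through the notes" step concrete, here is a minimal sketch of one way such a loop could look. It is not the actual HaiHai code: the criterion and element names are placeholders, the prompt wording is invented for illustration, and `complete` is a hypothetical function you would supply that sends a prompt to your model of choice and returns its text.

```python
from typing import Callable

# Placeholder names only. The real framework's criteria and elements
# are not reproduced here.
CRITERIA: dict[str, list[str]] = {
    "Criterion A": ["element A1", "element A2"],
    "Criterion B": ["element B1"],
}


def evidence_prompt(criterion: str, elements: list[str], notes: str) -> str:
    """Build one prompt asking for evidence of a single criterion (wording is an assumption)."""
    element_list = "\n".join(f"- {element}" for element in elements)
    return (
        f"Observation notes:\n{notes}\n\n"
        f"List evidence from the notes for {criterion}, "
        f"covering these elements:\n{element_list}"
    )


def draft_evaluation(
    notes: str,
    complete: Callable[[str], str],  # hypothetical: prompt in, model text out
    criteria: dict[str, list[str]] = CRITERIA,
) -> str:
    """Make one model call per criterion and join the results into a draft."""
    sections = []
    for criterion, elements in criteria.items():
        evidence = complete(evidence_prompt(criterion, elements, notes)).strip()
        sections.append(f"{criterion}\n{evidence}")
    return "\n\n".join(sections)
```

One call per criterion keeps each prompt small and focused, at the cost of more calls per evaluation. The output is still only a draft for the principal to edit.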

I want to stress that the principals are using this tool to write a draft of the evaluation. If you've ever written a performance review, you know that there are parts of the writing process that are human, and parts of the writing process that you have to do to make your observations fit the prescribed style so that they'll be accepted by The System. The bigger the bureaucracy, the more time you spend on the latter.

I'm as uncomfortable with the idea of AI evaluating teachers as you are. But I'm also deeply frustrated by a bureaucracy that hires people who got into the game because they care about kids, and then forces them to spend so much of their week doing work that is so robotic. If automated first drafts of teacher evaluations can free up dozens of hours for an overwhelmed principal to do more human work, I feel pretty great about that.

This is another pattern for when LLMs might be useful: where have we hired humans to do human work, and then overwhelmed them with robotic tasks?

LLMs are useful for tasks that are easy for humans but hard for code

When you send an email to HaiHai Adventures, I pass along the subject and body as a user message to the ChatCompletion API, but I do not want to include your email signature in that message.

No problem. Email signatures are easy enough to identify and strip, right?

First, I wrote a regular expression that stripped out my signature and all signatures that look like mine. Worked great in testing.

As it turns out, my dad's email signature follows a different format than mine. No problem. I added a new rule to the regex. But then my friend Pete tried it and Pete uses a salutation and a signature. Added a few more rules. Those rules, for some reason, didn't catch my friend Matt's signature.

My regex grew unwieldy, as they do.
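For a feel of how this goes, here is a rough sketch of a rule-based stripper. These are not my actual rules: the patterns and the `strip_signature` name are hypothetical, chosen to show how each new signature style means one more entry in the list.

```python
import re

# Hypothetical rules. Each one was added for "just one more" signature style.
SIGNATURE_RULES = [
    r"^--\s*$",                                        # conventional "-- " delimiter line
    r"^(best|thanks|cheers|regards|sincerely),?\s*$",  # common sign-off lines
    r"^sent from my \w+",                              # mobile client footers
]

SIGNATURE_START = re.compile(
    "|".join(f"(?:{rule})" for rule in SIGNATURE_RULES),
    re.IGNORECASE | re.MULTILINE,
)


def strip_signature(body: str) -> str:
    """Cut the body at the first line that matches any signature rule."""
    match = SIGNATURE_START.search(body)
    if match is None:
        return body.strip()  # no rule fired, so any signature stays in
    return body[: match.start()].strip()
```

A body ending in `Best,` followed by a name is trimmed correctly, but a signature such as `Matt B. | 555-0100` matches none of the rules and passes straight through. Salutations are not handled at all, so they would need yet another set of rules.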

Then I thought, "can gpt do this?" I tried a prompt that looked like this:

This is the body of an email.

Strip the salutation and signature from the body.

Return only the text of the email.

It worked really well.
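Here is a minimal sketch of how that prompt could be wired into a chat-style call. A few assumptions: sending the prompt as a system message and the email body as a user message is my choice for this example, and `complete` is a hypothetical function you would supply that takes a list of chat messages and returns the model's reply as a string.

```python
from typing import Callable

Message = dict[str, str]

# The prompt from above, sent as the system message (an assumption of this sketch).
STRIP_PROMPT = (
    "This is the body of an email.\n"
    "Strip the salutation and signature from the body.\n"
    "Return only the text of the email."
)


def build_messages(body: str) -> list[Message]:
    """Pair the instructions with the raw email body in chat-message form."""
    return [
        {"role": "system", "content": STRIP_PROMPT},
        {"role": "user", "content": body},
    ]


def strip_with_llm(body: str, complete: Callable[[list[Message]], str]) -> str:
    """Ask the model for the cleaned body; `complete` is a hypothetical wrapper around a chat API."""
    cleaned = complete(build_messages(body)).strip()
    # If the model returns nothing, fall back to the original text
    # rather than passing along an empty story prompt.
    return cleaned or body.strip()
```

Passing `complete` in as an argument keeps the sketch independent of any particular client library, and makes it easy to test with a stub that returns canned text.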

This is a fundamentally different type of problem than the other two. Those were user-facing features – but users don't care how I strip the signature from an inbound email body. This one is all about saving me, the developer, time and code.

Ben Stein talked about this concept at length in his post: How I replaced 50 lines of code with a single LLM call, and in our follow-up conversation.

How do you know when it might make sense to replace code with an LLM? The way Ben puts it, "LLMs are good at things that are easy for humans but hard for computers." (Or at least, used to be.)

You and I can look at an email and instantly tell where the signature starts. But it's hard to write rules that pattern match for this.

When you find yourself writing complex code to do something that's easy for a human, you might want to try an LLM.

What patterns have you found?

It's been so much fun over the last year discovering new ways to solve problems with LLMs. It's also been fun(ny) discovering the many situations where using an LLM is like hammering a nail with a power drill.

What patterns have you found?

You can reach me at: