GPT-4 exhibits "sparks of artificial general intelligence"

A team from Microsoft Research published a paper yesterday claiming that GPT-4 demonstrates "sparks of artificial general intelligence."

The paper is 154 pages long, including citations and figures. For anyone wondering "what can this thing do?", reading through the list of tests they put GPT-4 through is inspiring. Their methodology seems thorough.

Here are some of the key quotes from the introduction:

We demonstrate that, beyond its mastery of language, GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting. Moreover, in all of these tasks, GPT-4’s performance is strikingly close to human-level performance, and often vastly surpasses prior models such as ChatGPT. Given the breadth and depth of GPT-4’s capabilities, we believe that it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system.

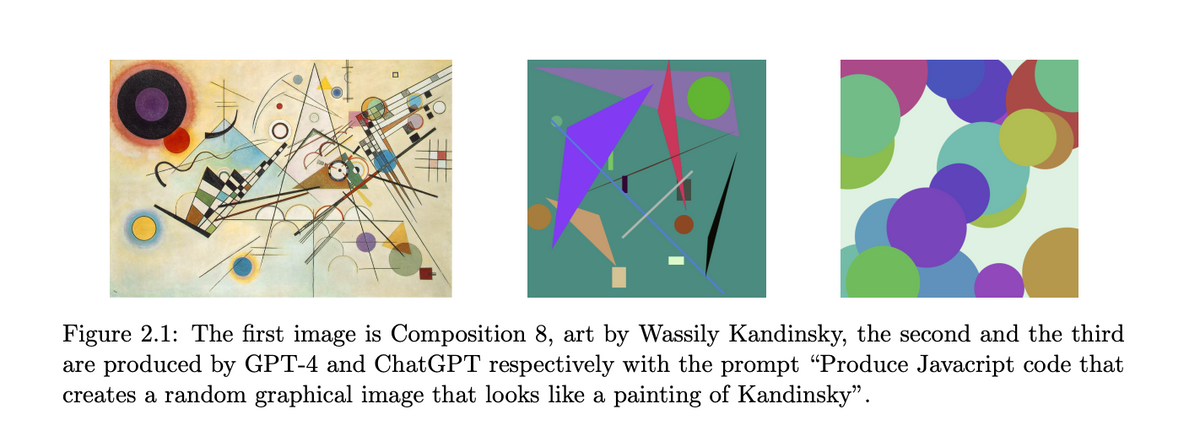

It's a huge advance over even ChatGPT

We also compare GPT-4’s performance to those of previous LLMs, most notably ChatGPT... we display the results of asking ChatGPT for both the infinitude of primes poem and the TikZ unicorn drawing. While the system performs non-trivially on both tasks, there is no comparison with the outputs from GPT-4. These preliminary observations will repeat themselves throughout the paper, on a great variety of tasks. The combination of the generality of GPT-4’s capabilities, with numerous abilities spanning a broad swath of domains, and its performance on a wide spectrum of tasks at or beyond human-level, makes us comfortable with saying that GPT-4 is a significant step towards AGI.

GPT-4 isn't AGI yet

Our claim that GPT-4 represents progress towards AGI does not mean that it is perfect at what it does, or that it comes close to being able to do anything that a human can do (which is one of the usual definition of AGI...), or that it has inner motivation and goals (another key aspect in some definitions of AGI). In fact, even within the restricted context of the 1994 definition of intelligence, it is not fully clear how far GPT-4 can go along some of those axes of intelligence, e.g., planning, and arguably it is entirely missing the part on “learn quickly and learn from experience” as the model is not continuously updating (although it can learn within a session). Overall GPT-4 still has many limitations, and biases, which we discuss in detail below and that are also covered in OpenAI’s report.

But GPT-4 is a very big deal

GPT-4 challenges a considerable number of widely held assumptions about machine intelligence, and exhibits emergent behaviors and capabilities whose sources and mechanisms are, at this moment, hard to discern precisely (see again the conclusion section for more discussion on this). Our primary goal in composing this paper is to share our exploration of GPT-4’s capabilities and limitations in support of our assessment that a technological leap has been achieved. We believe that GPT-4’s intelligence signals a true paradigm shift in the field of computer science and beyond.

From the paper's conclusion:

We have focused on the surprising things that GPT-4 can do, but we do not address the fundamental questions of why and how it achieves such remarkable intelligence. How does it reason, plan, and create? Why does it exhibit such general and flexible intelligence when it is at its core merely the combination of simple algorithmic components—gradient descent and large-scale transformers with extremely large amounts of data? These questions are part of the mystery and fascination of LLMs, which challenge our understanding of learning and cognition, fuel our curiosity, and motivate deeper research.

And the acknowledgments:

We thank OpenAI for creating such a marvelous tool and giving us early access to experience it.

What strikes me is how this team of researchers is speaking in terms of mystery and marvel about a piece of software. This is something different. Something new.

Shoutout to the team of researchers from Microsoft Research who put this paper together:

- Sébastien Bubeck

- Varun Chandrasekaran

- Ronen Eldan

- Johannes Gehrke

- Eric Horvitz

- Ece Kamar

- Peter Lee

- Yin Tat Lee

- Yuanzhi Li

- Scott Lundberg

- Harsha Nori

- Hamid Palangi

- Marco Tulio Ribeiro

- Yi Zhang